... to run an LLM on his OnePlus 6 running postmarketOS

Inspired by the recent postmarketOS podcast episode with magdesign, I set out to run a large language model (LLM) on my OnePlus 6 running postmarketOS. I had previously experimented with a few offline LLMs and already had a preferred candidate.

Set up the LLM

Run the following commands on your OnePlus 6 running postmarketOS.

sudo apk add git

git clone https://github.com/antimatter15/alpaca.cpp.git

cd alpaca.cpp

sudo apk add cmake

cmake .

cmake --build . --config Release

My first attempt at building alpaca.cpp with make and g++ failed. That method had worked on an x64 Linux desktop, but postmarketOS on ARM behaved differently. Switching to cmake and following the project's build-from-source instructions for Windows worked like a charm.
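The build steps above can be collected into a single function. This is only a sketch of the same commands, not something shipped with alpaca.cpp: `run`, `build_alpaca`, and the `DRY_RUN` switch are names made up for illustration. With `DRY_RUN=1` the commands are printed instead of executed, which is handy for checking the sequence before touching the phone.

```shell
#!/bin/sh
# Sketch: the build steps from this post wrapped in one function.
# run, build_alpaca and DRY_RUN are hypothetical names for illustration.

# Print the command when DRY_RUN=1, otherwise execute it.
run() {
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

# Install the tools, fetch the source, and build with cmake.
# Stops at the first step that fails.
build_alpaca() {
    run sudo apk add git cmake || return 1
    run git clone https://github.com/antimatter15/alpaca.cpp.git || return 1
    run cd alpaca.cpp || return 1
    run cmake . || return 1
    run cmake --build . --config Release
}
```

After sourcing the file, call `build_alpaca` to run the steps for real, or set `DRY_RUN=1` first to preview them.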

Download the following model weights into the alpaca.cpp folder. They are roughly 4GB and 8GB in size respectively. The 4GB version is enough for testing, but the 8GB version is a larger model (13 billion parameters versus 7 billion) and will generally produce better results. The trade-off is that it takes longer to reply to your questions.

- ggml-alpaca-7b-q4.bin (available via antimatter15 github)

- ggml-alpaca-13b-q4.bin (available over torrent)

Run the 7b (light) model

./chat -m ggml-alpaca-7b-q4.bin

Run the 13b (heavier) model

./chat -m ggml-alpaca-13b-q4.bin

If you only have the 7b (light) model, you can simply run the following command.

./chat
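If you keep both weight files around, a small helper can decide which one to pass to `./chat`. The sketch below is an assumption of mine rather than part of alpaca.cpp: `pick_model` is a hypothetical function that prefers the 13b file when it is present in the given directory and falls back to the 7b file.

```shell
#!/bin/sh
# Sketch: choose which weights file to pass to ./chat.
# pick_model is a hypothetical helper, not part of alpaca.cpp.
# It prints the 13b file name if that file exists in the given directory,
# otherwise the 7b file name. It returns 1 if neither file is present.

pick_model() {
    dir="${1:-.}"
    for f in ggml-alpaca-13b-q4.bin ggml-alpaca-7b-q4.bin; do
        if [ -f "$dir/$f" ]; then
            echo "$f"
            return 0
        fi
    done
    echo "no model weights found in $dir" >&2
    return 1
}
```

From inside the alpaca.cpp folder it could be used as `model="$(pick_model .)" && ./chat -m "$model"`. Swap the order of the two file names in the loop if you would rather default to the faster 7b model.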

Ask away! There are a few other ways to access the Alpaca models, but this is by far the easiest. I would love to see this offline LLM incorporated into the LLM chat app Bavarder. Bavarder is still in the early stages of development and has its share of bugs, but it has the benefit of being mobile-friendly.

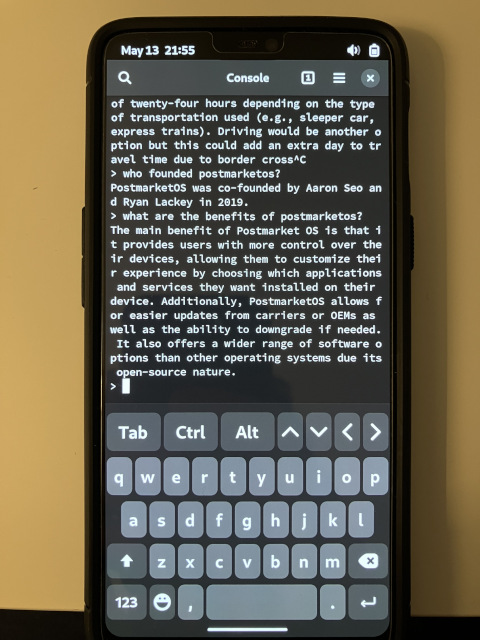

Alpaca answering some questions about postmarketOS

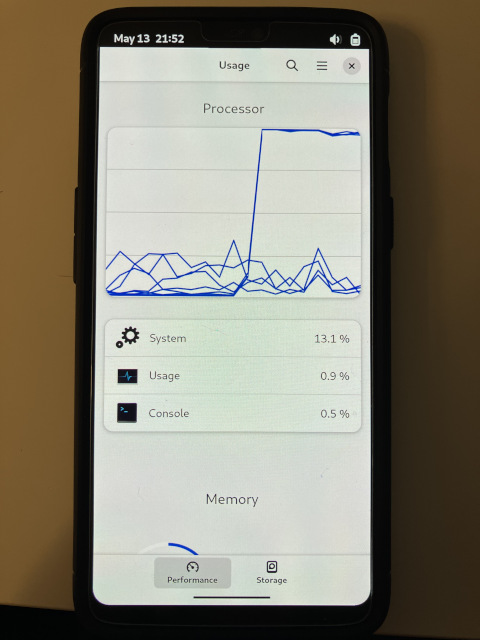

Alpaca using every last bit of power on the OnePlus 6